Performance notes

This post contains notes from various resources about web performance. You can use it as a quick reference, but, ideally, you should read the original articles if you have the time. The performance discussed here relates to how the browser works and how to optimize your code around that; it has nothing to do with algorithmic efficiency. It’s a work in progress and covers a lot of ground; in the future, I may break it into several posts, add more custom material, and make it easier to read. The following section lists the topics I cover here.

Main sections

- A. Performance events/metrics and how to track them with code

- B. Custom metrics

- C. Tracking performance metrics with Chrome DevTools

- D. Parse/render-blocking resources

- Links

- TODO

A. Performance events/metrics and how to track them with code

In this section, I list various performance events and metrics, and how you can track them with code. In section C, you can see how to track those events and metrics with Chrome DevTools.

Metrics

- 1. First Paint (FP)

- 2. First Contentful Paint (FCP)

- 3. First Meaningful Paint (FMP)

- 4. Largest Contentful Paint (LCP)

- 5. Flash of invisible text (FOIT)

- 6. Flash of unstyled text (FOUT)

- 7. Speed Index

- 8. DOMContentLoaded (DCL)

- 9. Load (L)

- 10. Time To Interactive (TTI)

- 11. Long tasks

When you use code to track performance, you measure performance in the real world, as opposed to using the Chrome DevTools (or a tool like Lighthouse), where you measure performance in lab conditions. That by itself is an advantage of code tracking over lab testing. With code, you can also capture the performance data, analyze it later, and then decide what to improve in your app. Both techniques are useful, though. In the list above, the acronyms in parentheses are the event names as they appear in Chrome DevTools.

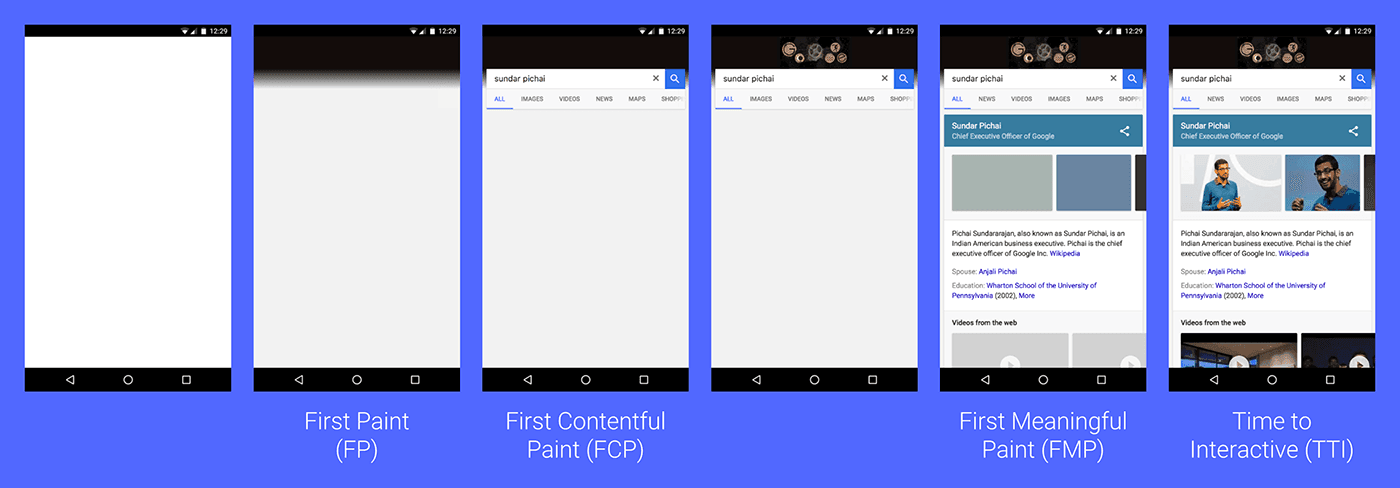

The image and notes here are from Philip Walton’s post, User-centric performance metrics, which was originally published on the Google Developers site. The article has since changed and moved to web.dev, but you can find the original article on GitHub.

The image shows some of the following metrics over time.

Technologies used

The code snippets in this section use the following technologies to track performance with code:

- You can handle and log the performance events with a Performance observer. With the performance observer you listen for specific performance entries:

- These are the performance entries and their attributes.

- These are the Types of the Performance entries.

- You can also create custom events with the User Timings API. React, for example, uses this API in development mode.
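As a rough illustration of the User Timing API mentioned above, the following sketch wraps a piece of synchronous work between two marks and measures the time between them. The helper name measureSync and the label are made up for this example; performance.mark and performance.measure are the actual API calls.

```javascript
// Hypothetical helper: runs `work` between two User Timing marks and
// returns its result together with the measured duration in milliseconds.
function measureSync(label, work) {
  const startMark = `${label}:start`;
  const endMark = `${label}:end`;

  performance.mark(startMark);
  const result = work();
  performance.mark(endMark);

  // measure() creates a "measure" entry that spans the two marks.
  const entry = performance.measure(label, startMark, endMark);
  return { result, duration: entry.duration };
}

// Example usage with an arbitrary piece of work.
const timedSum = measureSync("sum-to-a-million", () => {
  let total = 0;
  for (let i = 1; i <= 1e6; i++) total += i;
  return total;
});
console.log(timedSum.result, timedSum.duration);
```

The measure also shows up in the Timings track of a DevTools performance recording, and a PerformanceObserver that listens for "measure" entries will receive it.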

1. First Paint (FP)

This is the first time the browser renders something on the screen. Even a blank screen can be considered a “first paint”, as long as it comes from your code (you may have a white background for example).

2. First Contentful Paint (FCP)

The browser rendered some elements from your app. Those elements can be a navbar, an app shell, etc, but the result doesn’t have to be useful to the user. In other words, the user can’t use your app yet, but they see something happening.

Tracking FP and FCP

You can track the first paint and the first contentful paint by creating a PerformanceObserver and listening for paint entries, as shown in the following code block:

<script>

const observer = new PerformanceObserver((list) => {

for (const entry of list.getEntries()) {

const { name, startTime } = entry;

console.log({ name, startTime });

}

});

observer.observe({ entryTypes: ["paint"] });

</script>

- The Paint Timing API on W3C.

- Sending data to Google Analytics: Philip Walton: Tracking FP/FCP.
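If you want to capture these values instead of only logging them, one option is to collect the paint entries into a small object and send it to your own backend. This is a minimal sketch under a few assumptions: summarizePaintEntries is a hypothetical helper and "/analytics" is a placeholder endpoint, not something from the articles above.

```javascript
// Hypothetical helper: turns paint entries into a flat object such as
// { "first-paint": 120, "first-contentful-paint": 251 } (rounded ms).
function summarizePaintEntries(entries) {
  const summary = {};
  for (const { name, startTime } of entries) {
    summary[name] = Math.round(startTime);
  }
  return summary;
}

// Browser-only wiring; skipped in environments without a document.
if (typeof document !== "undefined" && typeof PerformanceObserver !== "undefined") {
  const paintObserver = new PerformanceObserver((list) => {
    const body = JSON.stringify(summarizePaintEntries(list.getEntries()));
    // "/analytics" is a placeholder URL; replace it with your own endpoint.
    navigator.sendBeacon("/analytics", body);
  });
  // buffered: true also delivers entries recorded before the observer existed.
  paintObserver.observe({ type: "paint", buffered: true });
}
```

Using the buffered flag means the observer doesn’t have to be registered before the first paint happens.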

3. First Meaningful Paint (FMP)

The first meaningful paint is the time when the most important part of the page, also known as the hero element, comes into view. For a blog, the hero element would be the post text, for a video app the video.

Tracking FMP

Tracking FMP is challenging and differs from application to application. As I mentioned before, sometimes the hero element will be text, sometimes an image, and sometimes a video. The following articles show some ways you can track FMP:

- Philip Walton explains how to track FMP using hero elements.

- The article above leads to an article by Steve Souders posted on Speedcurve.com that’s called User timing and custom metrics.

- This, in turn, leads to an old article, again by Steve Souders, called Hero image custom metrics.

4. Largest Contentful Paint (LCP)

The largest contentful paint (LCP) is a recent alternative to FMP and marks the time the largest content of the page becomes visible.

- Largest Contentful Paint on W3C (unofficial draft).

- Philip Walton’s article on web.dev called ”Largest Contentful Paint (LCP).”
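In browsers that support it, LCP can be tracked with the same PerformanceObserver approach used for FP and FCP. The browser may report several candidates while the page loads, and the most recent one is the current LCP. The sketch below assumes that behavior; latestLcpTime is a hypothetical helper name.

```javascript
// Hypothetical helper: returns the start time of the most recent LCP
// candidate, or null when there are no entries.
function latestLcpTime(entries) {
  if (entries.length === 0) return null;
  return entries[entries.length - 1].startTime;
}

// Browser-only wiring; skipped in environments without a document.
if (typeof document !== "undefined" && typeof PerformanceObserver !== "undefined") {
  let lcp = null;
  const lcpObserver = new PerformanceObserver((list) => {
    const latest = latestLcpTime(list.getEntries());
    if (latest !== null) lcp = latest;
    console.log({ LCP: lcp });
  });
  lcpObserver.observe({ type: "largest-contentful-paint", buffered: true });
}
```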

The next two sections have to do with font loading performance.

5. Flash of invisible text (FOIT)

The flash of invisible text (FOIT) happens when the fonts are still downloading, and the browser decides to render the page using invisible text. In other words, instead of text, the browser renders some empty blocks. When the original font downloads, the browser uses the font file to render the text inside those empty blocks. This is the default behavior you get (it depends on the browser) if you don’t specify the font-display property of a @font-face. The end of FOIT usually marks the first meaningful (or contentful) paint. The FOIT is used when the original font is too important for the design (the layout breaks without it or doesn’t make sense anymore), or when the fallback font will cause big text reflows.

6. Flash of unstyled text (FOUT)

The flash of unstyled text (FOUT) happens when the fonts are still downloading, and the browser decides to render the text in the fallback font. When the original font finishes downloading, the browser swaps the fonts. For this to happen over FOIT, you’ll have to use font-display: swap in the @font-face declaration. It’s not an ideal user experience—due to page reflows/jumps—but it’s good for performance. At least with this method the user sees the text. You can minimize/eliminate it by loading your fonts in two stages.

Measuring FOIT/FOUT

You can track when a font loads if you load the font with the CSS Font Loading API (see caniuse support), or if you use a 3rd-party package like the Web Font Loader. The following code snippet shows how to load a font with the CSS Font Loading API:

<script>

if ("fonts" in document) {

var bold = new FontFace(

"Mali",

"local('Mali Bold'), local('Mali-Bold'), url(/fonts/Mali-Bold.woff2) format('woff2'), url(/fonts/Mali-Bold.woff) format('woff')",

{ weight: "700" }

);

bold.load().then(function (font) {

document.fonts.add(font);

const endOfFOUT = performance.now();

console.log({ endOfFOUT });

});

}

</script>

7. Speed Index

Speed Index is another old metric that measures how quickly the content of the page is populated during page load. It’s a metric available in Lighthouse and is considered important. See the Speed Index article on web.dev.

8. DOMContentLoaded (DCL)

The DOMContentLoaded (or DCL) event fires when the initial HTML document has been loaded and parsed. The catch is that it doesn’t wait for external stylesheets, images, or subframes that are still downloading. It’s one of the oldest performance events, and a low DCL time doesn’t necessarily reflect a good user experience.

Tracking DCL event

There is an event listener available if you want to track DCL:

document.addEventListener("DOMContentLoaded", (e) => {

console.log({ DCL: e.timeStamp });

});

9. Load (L)

The Load event happens when the page has been parsed and loaded, but, in contrast to DCL, all the resources have finished downloading.

Tracking Load event

As with DCL, you can track the Load event with a listener:

window.addEventListener("load", (e) => {

console.log({ Load: e.timeStamp });

});

10. Time To Interactive (TTI)

The Time To Interactive (or TTI) metric marks the point at which the main JavaScript has loaded, and the main thread is idle (free of long tasks). At that point, the page can reliably respond to user input, hence the “interactive” in the name of the metric. If the app is not interactive, users may press buttons without seeing anything happen. Philip Walton explains Time to Interactive on web.dev.
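To make the “free of long tasks” part of the definition more concrete, here is a simplified sketch of how a TTI value could be estimated from a list of long tasks. It assumes a 5-second quiet window (the figure used in the web.dev definition) and ignores in-flight network requests entirely, so it is an illustration of the idea and not the algorithm used by Lighthouse or by any polyfill. The function name and its parameters are hypothetical.

```javascript
const QUIET_WINDOW_MS = 5000;

// Simplified estimate: starting at FCP, move the candidate forward to the
// end of each long task until a gap of at least QUIET_WINDOW_MS appears.
// Returns null if no quiet window was seen before the end of the trace.
function estimateTti(fcp, longTasks, traceEnd) {
  const tasks = longTasks
    .filter((task) => task.startTime + task.duration > fcp)
    .sort((a, b) => a.startTime - b.startTime);

  let candidate = fcp;
  for (const task of tasks) {
    if (task.startTime - candidate >= QUIET_WINDOW_MS) break;
    candidate = Math.max(candidate, task.startTime + task.duration);
  }
  return traceEnd - candidate >= QUIET_WINDOW_MS ? candidate : null;
}

const sampleLongTasks = [
  { startTime: 1200, duration: 300 },
  { startTime: 2000, duration: 100 },
  { startTime: 9000, duration: 200 },
];
console.log(estimateTti(1000, sampleLongTasks, 20000)); // 2100
```

In the sample data, the main thread is quiet from 2100 ms until 9000 ms, so the estimate is the end of the second long task.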

Tracking TTI

As with FMP, TTI can be challenging to track. You can track TTI with the help of a polyfill from Google. You can find up-to-date instructions on how to use the polyfill in the GitHub link, but I will also do that here. You can install it with NPM:

npm install tti-polyfill

You then place this inline script at the top of the head, before any other script (this step may be outdated, see the GitHub link):

<script>

// prettier-ignore

!function(){if('PerformanceLongTaskTiming' in window){var g=window.__tti={e:[]};

g.o=new PerformanceObserver(function(l){g.e=g.e.concat(l.getEntries())});

g.o.observe({entryTypes:['longtask']})}}();

</script>

Then, you can use the polyfill inside your code:

import ttiPolyfill from "./path/to/tti-polyfill.js";

ttiPolyfill.getFirstConsistentlyInteractive(options).then((tti) => {

// Use `tti` value in some way.

});

This code will probably be part of the main.js file. It’s not a bad idea to separate it from the main bundle and add it as a new entry point, if you control the Webpack/Rollup configuration (TODO: why?).

11. Long tasks

Long tasks are tasks that block the main thread for a long time (the Long Tasks API reports tasks that take more than 50 ms). This results in a lower frame rate, and the app is not able to respond fast to user input. In the context of page loading, compiling and executing a large JavaScript bundle can be considered a long task. Philip Walton: Long tasks API.

Monitoring long tasks

<script>

const observer = new PerformanceObserver((list) => {

for (const entry of list.getEntries()) {

const { name, startTime, duration } = entry;

// To distinguish them from "paint"/"measure" entries.

if (name === "self")

console.log({

// long tasks have an attribution field.

"long-task": { duration, attribution: entry.attribution },

});

}

});

observer.observe({ entryTypes: ["longtask"] });

</script>

Long Tasks API on W3C.
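Logging every long task can get noisy, so it may help to aggregate the entries before you report them. The following sketch is one possible way to do that; summarizeLongTasks is a hypothetical helper, and which numbers you keep is up to you.

```javascript
// Hypothetical helper: reduces long-task entries to a few summary numbers.
function summarizeLongTasks(entries) {
  let totalDuration = 0;
  let longest = 0;
  for (const { duration } of entries) {
    totalDuration += duration;
    longest = Math.max(longest, duration);
  }
  return { count: entries.length, totalDuration, longest };
}

// Browser-only wiring; skipped in environments without a document.
if (typeof document !== "undefined" && typeof PerformanceObserver !== "undefined") {
  const longTaskObserver = new PerformanceObserver((list) => {
    console.log(summarizeLongTasks(list.getEntries()));
  });
  longTaskObserver.observe({ type: "longtask", buffered: true });
}
```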

B. Custom metrics

In addition to the standard metrics and events you saw in the previous section, it may be useful to track some custom metrics that are not covered by the existing APIs.

In the following list, you can find the custom metrics I mention in this section:

- 1. Tracking input lag

- 2. Track performance to see how it affects business

- 3. Tracking load abandonment

- 4. Stylesheets done blocking

- 5. Scripts done blocking

1. Tracking input lag

You can track input events that take too long to process and, as a result, can cause drops in frame rate, something that your users will notice. The following code snippet measures the time from the moment a user clicks a button until the handler finishes executing. You get the exit time with performance.now(), at the end of the handler, and the click time with event.timeStamp:

button.addEventListener("click", function inputLag(event) {

// Do work here, then track.

const lag = performance.now() - event.timeStamp;

if (lag > 100) console.log({ event, lag: Math.round(lag) });

});

Input events that take long to process are long tasks, and you already saw how to track long tasks. By tracking input lag, you can see which piece of code caused a long task.

While this technique isn't perfect (it doesn't handle long event listeners later in the propagation phase, and it doesn't work for scrolling or composited animations that don't run on the main thread), it's a good first step into better understanding how often long-running JavaScript code affects user experience.
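If you want to track input lag for more than one handler, the measurement can be moved into a small wrapper. This is a sketch, not an established pattern from the articles: withInputLagTracking and its options (threshold, report, now) are hypothetical names, and the 100 ms default mirrors the threshold in the snippet above.

```javascript
// Hypothetical wrapper: runs the handler, then reports the lag between the
// event's timestamp and the moment the handler finished.
function withInputLagTracking(handler, options = {}) {
  const {
    threshold = 100,
    report = console.log,
    now = () => performance.now(),
  } = options;

  return function trackedHandler(event) {
    const result = handler.call(this, event);
    const lag = now() - event.timeStamp;
    if (lag > threshold) report({ type: event.type, lag: Math.round(lag) });
    return result;
  };
}

// Usage in the browser (button and onClick are placeholders):
// button.addEventListener("click", withInputLagTracking(onClick));
```

Passing the clock in as an option makes the wrapper easy to exercise without a real event.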

2. Track performance to see how it affects business

Philip Walton: How performance affects business.

3. Tracking load abandonment

Philip Walton: Load abandonment.

4. Stylesheets done blocking

External stylesheets (<link rel="stylesheet" />) block the page from rendering until they get downloaded and parsed. As a result, it’s useful to know when these stylesheets are done blocking. The code snippet below shows how to measure that:

<link rel="stylesheet" href="/sheet1.css" />

<link rel="stylesheet" href="/sheet2.css" />

<style>

/* Critical inline CSS */

</style>

<script>

performance.mark("stylesheets done blocking");

</script>

This method is from an article by Steve Souders posted on Speedcurve called ”User timing and custom metrics.”
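A mark on its own only records a timestamp; to use it, you have to read it back. The sketch below looks marks up by name with performance.getEntriesByName. The helper name readMarks is hypothetical, and a mark that hasn’t been recorded yet is returned as null.

```javascript
// Hypothetical helper: returns { markName: roundedStartTime | null }.
function readMarks(names) {
  const marks = {};
  for (const name of names) {
    const [entry] = performance.getEntriesByName(name, "mark");
    marks[name] = entry ? Math.round(entry.startTime) : null;
  }
  return marks;
}

// Example: read the custom marks once the page has had a chance to set them.
console.log(readMarks(["stylesheets done blocking", "scripts done blocking"]));
```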

5. Scripts done blocking

In the same article, Steve Souders explains how to do the same thing for synchronous scripts (those without async or defer). In the following snippet, we mark the time that script a.js has finished downloading, parsing, and executing. Script b.js will most probably be executed after the inline script because it needs some time to download and, being async, doesn’t block the parser while it does.

<script src="a.js"></script>

<script src="b.js" async></script>

<script>

performance.mark("scripts done blocking");

</script>

C. Tracking performance metrics with Chrome DevTools

This section shows how I track the performance metrics and events from section A with Chrome DevTools. You can find most of the information here in the ”Performance Analysis Reference” by Kayce Basques, which is available on the Google Developers site.

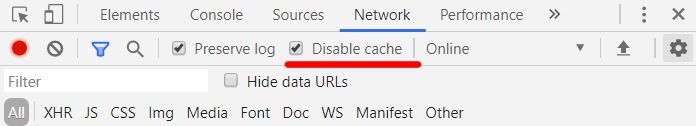

Setup

To give yourself more room to work, open the DevTools and undock them into a separate window instead of having them at the right or bottom. To do that press the three dots at the top right and select the first option. Make sure you have the “Disable cache” option checked in the network tab, as shown in the image below:

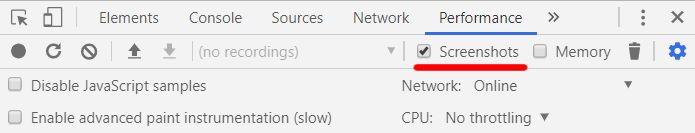

You should also have the “screenshots” option checked in the performance tab. This option will allow you to see screenshots of your app as it loads in the Frames dropdown while you are inside the performance tab.

The app you’re measuring may store data in local storage or IndexedDB, so clear it before each recording: press Ctrl + Shift + P and type “Clear site data”. While you are in the performance tab, press Ctrl + Shift + E or the circular arrow button at the top left to “start profiling and reload the page”. For a more realistic scenario, you can emulate an average mobile device by selecting “Network: Fast 3G” and “CPU: 4x slowdown”.

To run repeated tests on the page (something necessary when you measure performance), you can speed up the process as follows. Copy the following string to your clipboard:

> Clear site data

Then press Ctrl + P to bring up the command palette, paste the text, and press enter. This way, you can clear the site data with 3 keystrokes.

Measure metrics represented by vertical lines

FP, FCP, FMP, LCP, DCL, and Load are all represented by vertical lines in the performance tab. To measure one of these metrics accurately, you can do the following. Select a small portion around the line in the overview with left-click and drag (a single left-click should also be enough). Then, zoom in with the mouse until you’re in the range of milliseconds. You can then write down the milliseconds number that’s under the overview graph. The following gif shows how to do that:

You can alternatively use the keyboard. Focus on an area under the overview, and press W/S to zoom-in/zoom-out. You should see the selected area changing in the overview. You can move the selection left and right by pressing A/D respectively.

Measure the end of FOIT/FOUT

If you want to measure the end of FOIT or FOUT, you won’t have vertical lines guiding you, so you’ll have to rely on the screenshots in the overview. Identify the point at which the end of FOIT/FOUT happens (the point when the fonts switch), and select from that point until the start of the recording. The following gif shows how to do that:

What you want to do now is to measure the duration of the area you selected. Move the mouse to the area below the overview. Find the start, hold Shift + left-click and drag until the end. At the bottom-middle, you can see the duration of the area you highlighted. You can’t highlight the network overview area—if you have opened it—so you’ll have to drag below that area. The following gif shows that process (notice that I highlight below the open network area):

I want to point out that, sometimes, the screenshots in the overview are not consistent. If that’s the case, to find the exact time the FOIT/FOUT ends, search in the main thread’s flame graph for a paint activity. The point where the old frame ends and the new frame starts—indicated by dotted lines—is the end of FOIT/FOUT. Opening the frames overview can help you figure out where the frames start and where they end.

To remove the selection, double click inside the overview to select the whole recording. Then, click outside of the highlighted area under the overview, as shown below:

For more accurate measurements, repeat this process a few times and calculate the averages in a spreadsheet. Keep in mind, though, the fastest and most accurate way to measure performance is with code. Also, the screenshots in the Frame and the overview area may be inconsistent between different versions of the DevTools. For example, the DevTools in Chrome version 80 don’t show the correct screenshots for my blog, but the DevTools in the canary version 83 show them right.
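If you’d rather not use a spreadsheet, a few lines of code can do the averaging. This is a minimal sketch; summarizeSamples is a hypothetical helper and the sample values are made-up numbers, not real measurements.

```javascript
// Hypothetical helper: basic statistics for a list of measurements (in ms).
function summarizeSamples(samples) {
  if (samples.length === 0) throw new Error("Need at least one sample");

  const sorted = [...samples].sort((a, b) => a - b);
  const mean = sorted.reduce((sum, value) => sum + value, 0) / sorted.length;
  const middle = Math.floor(sorted.length / 2);
  const median =
    sorted.length % 2 === 0
      ? (sorted[middle - 1] + sorted[middle]) / 2
      : sorted[middle];

  return { mean, median, min: sorted[0], max: sorted[sorted.length - 1] };
}

console.log(summarizeSamples([120, 100, 140, 110]));
// { mean: 117.5, median: 115, min: 100, max: 140 }
```

The median is included because a single slow run can skew the mean noticeably when you only have a handful of recordings.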

Monitoring activities with table forms

You can view the main thread activities (function calls) in a table form, instead of viewing them in the standard flame graph that’s shown in the Main area of the recording. In a table form, you can inspect the self-time of the activities, not only the total time you see in the flame graph. You can also group them in interesting ways. For example, you can group the activities by domain, activity, category, and more. You can find the available table forms at the bottom of the performance tab. These are the available table forms:

- Bottom-Up: Use it to find out which activities are the most expensive. To activate that view, select the Main area in the performance tab. Then, click the “Bottom-Up” tab that’s next to the default selected tab called “Summary” (at the bottom of the screen). There you can group the activities, or you can sort them by self-time or total time. In this view, the children of an activity (the activities that show up when you expand a parent activity) are not the functions that the activity called, but the functions that called it. The opposite is true for the next two views.

- Call tree: View the call stack. Each parent activity is a root activity such as Event Click, Paint, etc.

- Event log: View the activities in chronological order.

Other tips

- You can force Garbage Collection (GC) in the performance tab by clicking on the trash icon at the top of the performance tab.

- Read how to Understand network diagrams from the Performance analysis reference.

D. Parse/render-blocking resources

This section contains notes from an article by Milica Mihajlija on Mozilla Hacks that’s called ”Building the DOM faster: speculative parsing, async, defer and preload”, and from the script element’s reference page on MDN.

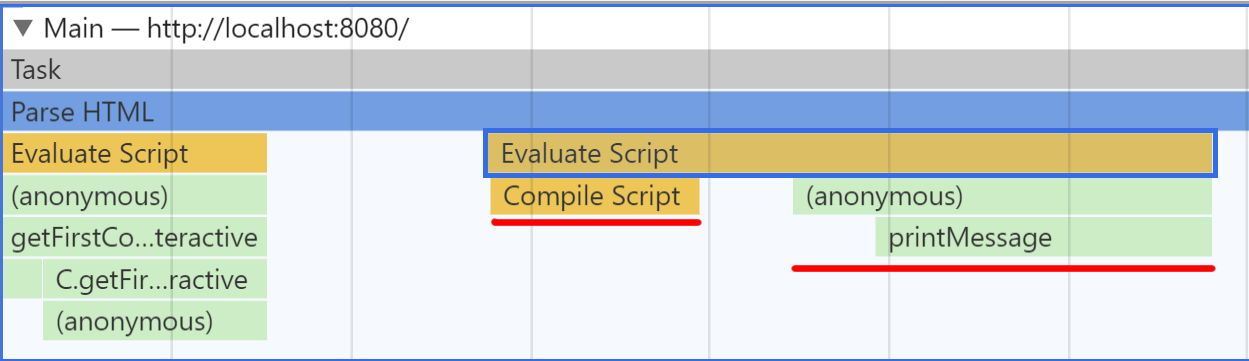

Regular scripts block parsing and rendering during the time they need to download, get parsed, and execute (parsing and execution time is called script evaluation in DevTools). This includes inline scripts (minus the download time) and external scripts without async or defer attributes.

async scripts don’t block parsing/rendering while they are downloading (they also have low download priority), but they may block when they finish because they get parsed and executed right away (when the main thread is available).

defer scripts are like async scripts, in that they don’t block and have low download priority, but they don’t get evaluated the moment they finish downloading. They get evaluated after the browser finishes parsing the HTML and before the DCL event. Also, in contrast to async scripts, they are evaluated in the order they appear in the HTML. You can use defer for scripts that depend on each other (for example, a defer script that imports a library and, right below it, a defer script that uses that library) and async for independent scripts.

Parsing and execution are referred to as evaluation by the Chrome DevTools. This includes script compilation and execution. Take a look at the following inline script:

<script>
  function printMessage(message) {
    console.log(message);
  }
  printMessage("inline");
</script>

In the following image, you can see what the script looks like when it gets evaluated by the browser. Notice that the evaluation activity contains the compilation and the execution of the script. The horizontal axis represents time and the vertical axis the call stack:

Evaluate script activity in DevTools

Preload links start downloading right away with high download priority. You can use them in combination with async or defer scripts that are placed further down in the document. Keep in mind that browsers already do some optimizations like speculative parsing. Speculative parsing is an optimization that runs off the main thread where the browser starts downloading critical resources that may be needed later in the page.

Scripts placed right before the closing body tag don’t block the rendering of the content above them (they still run before the DCL event fires). That’s why it’s considered good practice to place your blocking scripts right before the closing body tag.

Unlike downloading scripts, downloading CSS doesn’t block parsing, but it still blocks rendering. This happens because we don’t want flashes of unstyled content, that is, displaying the page content without having the styles. That’s why we place CSS at the top of the head. To prevent render blocks while the style downloads, you can place the CSS in style tags (inline). It will be even better if you inline only the critical CSS (the CSS needed for the current page to render), and use a preload link for the rest of the styles:

<style>
  /* Critical CSS */
</style>
<link rel="preload" href="style.css" as="style" onload="this.onload=null;this.rel='stylesheet'" />
<noscript>
  <link rel="stylesheet" href="style.css" />
</noscript>

To extract the critical CSS, you can use the critical tool. To support browsers that don’t recognize the preload links, use the loadCSS polyfill.

You can find more information about critical CSS in an article by Demian Renzulli on web.dev that’s called ”Defer non-critical CSS” and in an article by Milica Mihajlija called ”Inline critical CSS“.
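The preload pattern for non-critical CSS can also be applied from JavaScript, which may be handy when the stylesheet URL is only known at runtime. The sketch below mirrors the onload trick from the markup version; loadStylesheetAsync is a hypothetical helper, and the optional doc parameter exists only so the function can be exercised outside a browser.

```javascript
// Hypothetical helper: preloads a stylesheet and applies it once it has
// finished downloading, so it doesn't block the initial render.
function loadStylesheetAsync(href, doc = document) {
  const link = doc.createElement("link");
  link.rel = "preload";
  link.as = "style";
  link.href = href;
  link.onload = () => {
    // Same idea as onload="this.onload=null;this.rel='stylesheet'".
    link.onload = null;
    link.rel = "stylesheet";
  };
  doc.head.appendChild(link);
  return link;
}

// Browser usage; skipped in environments without a document.
if (typeof document !== "undefined") {
  loadStylesheetAsync("style.css");
}
```

Note that, unlike the markup version, this variant has no noscript fallback, because it depends on JavaScript by design.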

Links

- Performance Analysis Reference by Kayce Basques on Google developers site.

- User-centric performance metrics by Philip Walton on web.dev, and the old article on GitHub.

- User timing and custom metrics by Steve Souders on speedcurve.com.

- A collection of articles on how to achieve Fast load times on web.dev.

- Building the DOM faster: speculative parsing, async, defer and preload by Milica Mihajlija on Mozilla Hacks.

- User Timing API on MDN.

- See also a series of posts by Glenn Conner called ”Making Instagram.com faster“.

- Profiling React.js Performance by Addy Osmani. The links at the bottom are useful.

- Progressive React by Houssein Djirdeh.

TODO

- Many performance metrics and code blocks are outdated.

- Add and update metrics: https://youtu.be/iaWLXf1FgI0 @ 7:50 or at web.dev/metrics/

- @8:37: How lighthouse score is calculated.

- @11:20: Use page speed insights API as a cronjob to measure performance.

- bit.ly/perf-variance is an article that explains performance variance under the same lab conditions.

- @ about 17:00: An example of a lighthouse plugin.

- Check lighthouse CI: bit.ly/lhci and bit.ly/lhci-action
